Overview

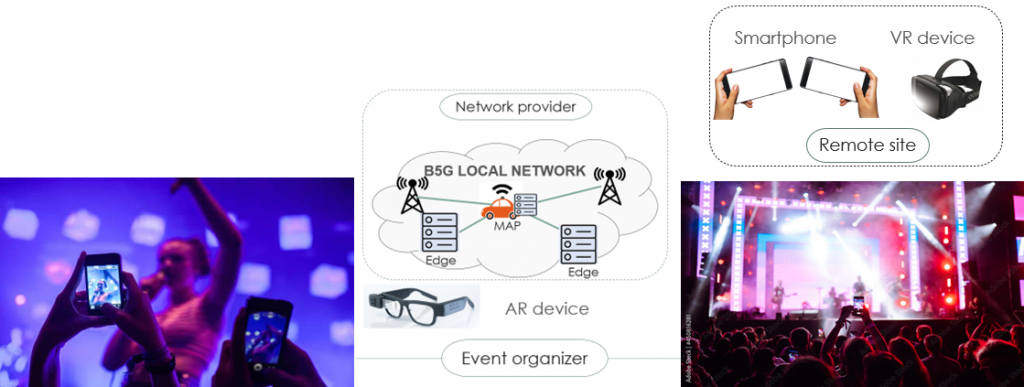

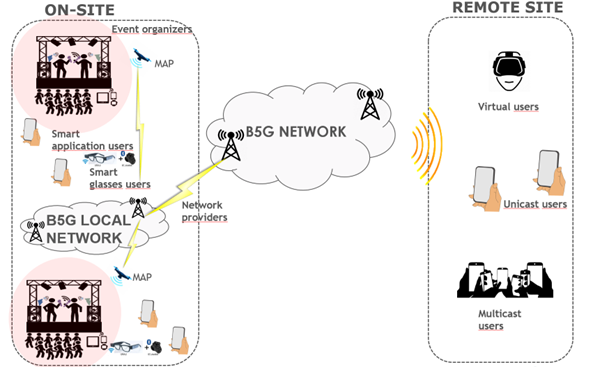

The Enhanced Experience use case focuses on live public events characterized by a high density of local users (participants) together with remote participants who attend virtually. In such events, the underlying mobile network is stressed by users accessing services on their devices, by live streaming from the site, and by access to similar services. As a consequence, a large audience competes for the same network resources within a small area, which calls for a network that can adapt dynamically. In addition, large crowds move from one venue to another depending on the time and place (e.g., multiple stages attract audiences of varying size). Dynamic network coverage is therefore needed to provide seamless connectivity. The DEDICAT 6G solution for Enhanced Experience addresses these issues and aims to provide a richer quality of experience to local spectators and an enhanced live experience to remote users over B5G/6G networks.

Because the Enhanced Experience use case aims to reduce the gap between local and remote participants at crowded events, several beneficiaries (stakeholders) can be identified depending on their physical location. In the event area itself, the main beneficiaries are the local audience. These users are equipped with DEDICAT 6G compatible devices that enhance their live quality of experience through improved navigation with smart glasses, real-time announcements of simultaneous shows in different areas (stages), and the possibility to stream those shows online. Local users can also live stream themselves using the implementation architecture developed in DEDICAT 6G.

On the consumer side, remote participants form another group of beneficiaries, who can experience the live event from a remote location, e.g., from their home. This user group is also equipped with smart terminals that can receive announcements of live streams and browse the views offered by the different camera sources in the event area. One of the content sources provides a live “see what I see” view originating from the smart glasses.

Video service providers can also benefit from DEDICAT 6G technology in this use case, because novel proactive adaptation to fluctuating network conditions allows higher-quality video to be streamed to end users. Mobile multicast can save network resources and energy while also improving the quality of experience for end users. Dynamic coverage extension that follows the crowd movement will also improve quality, especially on the uplink (UL). Naturally, improved service quality can increase the financial value of the service(s). Virtual participation, especially during the COVID-19 pandemic, can significantly increase the number of remote participants.
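The network saving attributed to mobile multicast above can be illustrated with simple arithmetic. The following is a minimal sketch under idealized assumptions that are not taken from the project: all viewers sit in one cell, all receive the same rendition, and a single multicast copy serves everyone. The function names, the crowd size, and the bitrate are hypothetical.

```python
def unicast_load_mbps(viewers: int, bitrate_mbps: float) -> float:
    """Downlink load when every viewer receives an individual copy of the stream."""
    return viewers * bitrate_mbps


def multicast_load_mbps(viewers: int, bitrate_mbps: float) -> float:
    """Downlink load when one shared copy serves all viewers in the cell (idealized)."""
    return bitrate_mbps if viewers > 0 else 0.0


def multicast_saving(viewers: int, bitrate_mbps: float) -> float:
    """Fraction of downlink capacity saved by multicast relative to unicast."""
    unicast = unicast_load_mbps(viewers, bitrate_mbps)
    if unicast == 0:
        return 0.0  # nobody is watching, so there is nothing to save
    return 1.0 - multicast_load_mbps(viewers, bitrate_mbps) / unicast


if __name__ == "__main__":
    viewers, bitrate = 100, 5.0  # hypothetical crowd size and stream bitrate
    print(f"unicast:   {unicast_load_mbps(viewers, bitrate):.0f} Mbit/s")
    print(f"multicast: {multicast_load_mbps(viewers, bitrate):.0f} Mbit/s")
    print(f"saving:    {multicast_saving(viewers, bitrate):.0%}")
```

With 100 viewers of a 5 Mbit/s stream, unicast needs 500 Mbit/s of downlink capacity while the idealized multicast case needs 5 Mbit/s. Real deployments save less, since multicast has to be sized for the viewer with the weakest radio link.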

Use case stories

The three stories planned for the Enhanced Experience use case take place in two locations: on-site (a public venue such as a music concert) and in a remote location (e.g., the user’s home) that serves as a means of virtual participation. The stories focus on applying DEDICAT 6G technology more efficiently for on-site participants and on narrowing the gap between physical and virtual presence at such public events. Specifically, Story 1 concentrates on improving the on-site experience, Story 2 enhances the remote virtual experience, and Story 3 combines the two through a live service for remote users.

Story 1: You are attending a live public event, such as a music concert with multiple stages, and you are glancing at the event brochure, wondering which artist to see next. Suddenly your mobile phone alerts you: you are receiving a live video stream from a friend who has found a great position close to the stage of an artist you are also interested in. You navigate according to the stream and quickly join your friend to watch your favourite band. After a while, you remember that some of your friends are not at the concert at all, and you decide to invite them. Since you are connected to a smart mobile network cell enabled with the sophisticated DEDICAT 6G technology, you can easily launch a mobile video streaming service with your modern smartphone and its high-definition camera, even though many other mobile users are competing for the same network resources.

From the brochure, you find that each event is scheduled on a different stage. Using the event-stage mapping information, you can move to the target stage and find a place to watch the event. Since the network adopting DEDICAT 6G technology provides dynamic coverage for connectivity extension, you can connect to the network and share or send video streams from anywhere in the event venue.

Story 2: You are at home when a friend contacts you by mobile phone and streams live video from a concert. Using virtual presence through VR glasses, you enjoy an enhanced remote experience as if you were a member of the audience. At some point you receive another stream notification, this time from the event organizers, with an access link to a further live stream from the concert. In this way, high-quality content from static cameras is distributed to a large audience participating virtually in the event. The COVID-19 pandemic is a good example of such a use case: rather than postponing the event, the organizers can live stream the performances to paying users.

Story 3: This story takes place during a concert in an area (stadium, concert park, etc.) where the attendees gather and enjoy the shows (different stages feature various bands and music styles) and then engage in live streaming activities. Two actors are involved: the main actor, say user X, is a music enthusiast who attends the concert, and the second actor (or category of actor) is one of X’s Facebook™ Live followers. A so-called Connected Car has been deployed at the beginning of the concert to increase radio capacity, as the event is expected to be the first massive Facebook Live event over 6G.

Human centric applications

The Enhanced Experience use case involves two main actors: local attendees at the event site and remote attendees elsewhere. Local spectators can act as content producers, i.e., streaming video from the site, or as consumers playing content provided by other users. As consumers, they can use the content to navigate to stages of interest or to watch alternative content simultaneously on their UEs. In line with the stories, the content is pushed to a dedicated video streaming platform developed in the project, which is connected to the DEDICAT 6G platform to optimize the service. The dedicated remote users will be able to access these services in a secure way. It is also worth noting that on-site users relying on video streaming technology as content producers may introduce annoying audio latency, which is why the technology is aimed mainly at remote attendees.

Different source cameras will be used to generate an enhanced virtual experience for end users. These include ordinary 2D cameras connected to UEs (USB-based cameras or cameras integrated into smartphones) and eXtended Reality (XR) capable cameras (such as 360° cameras) that offer a free viewpoint and an improved virtual experience. However, the more complex HW and SW involved can increase the End-to-End (E2E) latency compared with more traditional solutions. Finally, smart glasses will also be used; they are described next.

Optinvent will provide smart glasses at the event site to produce content for the end users, with several ORA-2 smart glasses supplied for each event. These devices run on the Android platform, and each should be connected to a local smartphone through a Wi-Fi hotspot to reach the Internet, since the glasses have no built-in 4G or 5G connectivity.

The glasses are standalone devices that let users see bright images while keeping the outdoor scene visible. They have an embedded Global Positioning System (GPS) receiver, so the user’s position can also be tracked during the event if necessary. The ORA-2 smart glasses run Android KitKat and are compatible with any existing application from Google Play. Video could be streamed either directly from the glasses or through the user’s smartphone (which also serves as the Wi-Fi hotspot). This point should be assessed in the coming month to select the way of streaming video from the glasses that gives minimal latency. To interact with the glasses, a touch pad lets the user navigate a specific menu provided by the application that is to be developed for this use case. However, to improve the ergonomics of the application (app) and let the user concentrate on and enjoy the event, an accessory joystick connected to the glasses over Bluetooth will be used.

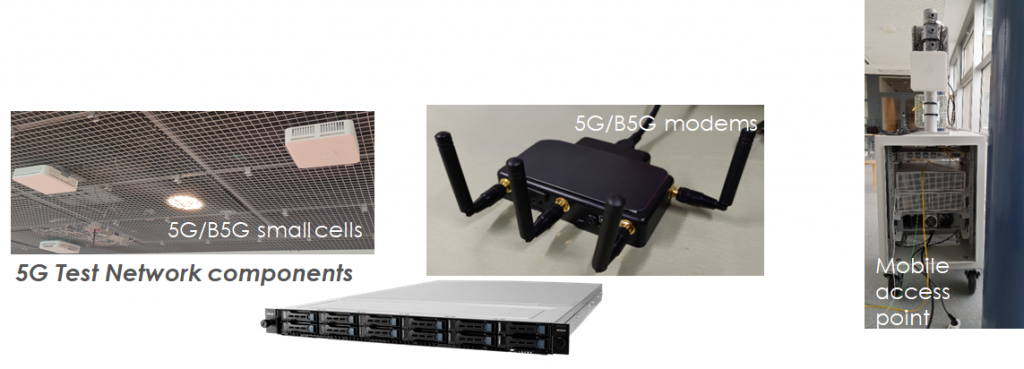

VTT will provide the 5GTN infrastructure for the pilots as well as the multicast/unicast video streaming platform, together with the applications and UEs that are needed especially to support mobile multicast. The unicast service and Facebook Live™ can be accessed with practically all modern smartphones, tablets, and laptops. The video transcoding unit associated with the video streaming platform will generate the formats (e.g., HLS, MPEG-DASH) needed for playback on various client devices. The figure on the left below represents sample tests using Long Term Evolution (LTE) (5G) multicast streaming of pre-encoded content to three end-user devices.
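To make the role of the transcoding unit more concrete, the sketch below builds an HLS master playlist that advertises several renditions of one stream, so that each client device can pick a variant it can play. It is only an illustration and not the project's implementation: the `Rendition` type, the rendition names, the bitrate ladder, and the `<name>/index.m3u8` path layout are all hypothetical. Only the `#EXTM3U` and `#EXT-X-STREAM-INF` tags come from the HLS specification.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Rendition:
    """One transcoded variant of the stream (hypothetical helper type)."""
    name: str           # used to build the variant playlist path
    width: int
    height: int
    bandwidth_bps: int  # peak bitrate advertised to the player


def build_master_playlist(renditions: Iterable[Rendition]) -> str:
    """Return an HLS master playlist listing the renditions by ascending bitrate."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda item: item.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth_bps},"
            f"RESOLUTION={r.width}x{r.height}"
        )
        # Assumed layout: each rendition has its own media playlist.
        lines.append(f"{r.name}/index.m3u8")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical bitrate ladder, not taken from the pilot configuration.
    ladder = [
        Rendition("1080p", 1920, 1080, 6_000_000),
        Rendition("720p", 1280, 720, 3_000_000),
        Rendition("360p", 640, 360, 800_000),
    ]
    print(build_master_playlist(ladder), end="")
```

A player that reads this playlist can switch between the listed variants as its measured throughput changes, which is the client-side counterpart of the adaptation to fluctuating network conditions. MPEG-DASH expresses the same idea with an XML manifest (MPD) instead of a playlist.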

The Enhanced Experience live pilot will take place in Oulu, Finland, and is planned for Q1/2023.

- VTT 5G Test Network (5GTN) infrastructure will be used for the live experiments

- The current status includes first measurements in the 5GTN using the pilot HW, MAP development and integration, and visualization of the overall pilot scenarios